Set your own client.id/secret via googleAuthR::gar_set_client().Create your own Google Cloud Project or use an existing one.If you want to set it up yourself, the setup wizard goes through these steps: However, using the default project means you will not need to go through the process outlined below to create your own Google Cloud Platform credentials and you can directly authenticate using the ga_auth() function and your browser as soon as the library is downloaded. With the amount of API calls possible with this library via batching and walking, its more likely the default shared Google API project will hit the 50,000 calls per day quota. The default library is only intended for users to get to know the library looking for data as quickly as possible. You can not use the default project for scheduled scripts on servers - only interactivly. It is preferred to use your own and get your own verificataion process.

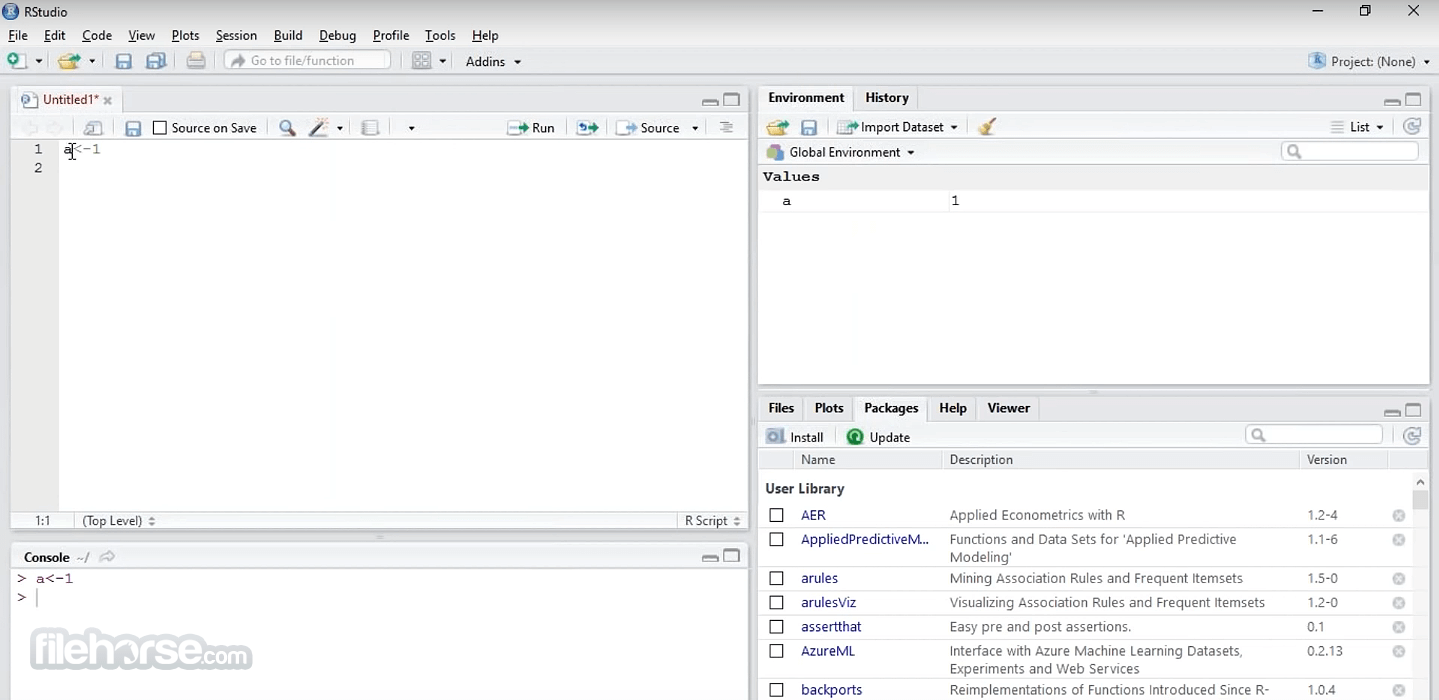

However since Google has changed verification processes, the consent screen for the default googleAnalyticsR library may warn of ‘danger’, which you will need to trust and accept if you want to continue using the default project. Even though it is a shared project other package users are not able to see and access your GA data. The fast authentication way of the previous section worked via the default Google Cloud Project for googleAnalyticsR which is shared with all googleAnalyticsR users. MyData <- as.ame(read_csv(file.path(data_dir, “something.‘Try-it-out’ mode: The default googleAnalyticsR project library(“data.table”)ĭata_dir <- gs_data_dir(“gs://your-bucket-name”) If you want it formatted as a data frame it should look something like this. You would then want to read data from the file path with read_csv(file.path(data_dir, “something.csv”)). Then you would need to use gs_data_dir(“gs://your-bucket-name”) along with specifying the file path file.path(data_dir, “something.csv”). I would assume you are accessing this data from a bucket? Firstly, you would need to install the “readr” and “cloudml” packages for these functionalities to work. There is one other way you can read a csv from your cloud storage with the TensorFlow API. MyData <- as.ame(fread(file="$FILE_PATH",header=TRUE, sep = ',')) Here is the code snippet I ran and managed to display the data frame for my data set: library(“data.table”) In order to run this code snippet make sure you install (install.packages("data.table")) and included the library library(“data.table”)Īlso be sure that you include the fread() within the as.ame() function in order to read the file from it’s location. I’ve tried running a sample csv file with the as.ame() function.
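As a minimal sketch of those two steps, assume you have already created a project and downloaded its OAuth client ID JSON file from the Google Cloud Console. The helper name auth_with_own_project(), the file path and the email address are hypothetical placeholders. The sketch also assumes that googleAuthR::gar_set_client() accepts the JSON path through its json argument and that googleAnalyticsR::ga_auth() accepts an email argument; check both against the installed package versions.

```r
# Hypothetical helper: authenticate googleAnalyticsR against your own
# Google Cloud Project instead of the shared default project.
# Assumes googleAuthR and googleAnalyticsR are installed and that
# client_json is the path to an OAuth client JSON file you downloaded
# from the Google Cloud Console.
auth_with_own_project <- function(client_json, email = NULL) {
  # Register your own client ID/secret so API calls count against
  # your project's quota rather than the shared default project's
  googleAuthR::gar_set_client(json = client_json)
  # Browser-based OAuth flow, now using your own client
  googleAnalyticsR::ga_auth(email = email)
}

# Usage: only runs in an interactive session; both values are placeholders
if (interactive()) {
  auth_with_own_project("path/to/client_secret.json",
                        email = "you@example.com")
}
```

The interactive() guard reflects that this browser-based flow needs someone at the keyboard to accept the consent screen.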
